SCAMPI Quick Start Guide

1. Introduction

The SCAMPI tools are HPCMP command line utilities that accelerate data transfer. They are currently installed on most HPCMP systems at /app/mpiutil. There are three variants: SCAMPI, ISCAMPI, and HISCAMPI. Because of its overhead and setup requirements, SCAMPI should only be used to copy more than 1 GB between HPCMP systems. Smaller transfers should use scp or mpscp.
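The 1 GB guideline above can be turned into a quick pre-check. The following is only a minimal sketch: the function name `suggest_tool` is hypothetical and not part of the SCAMPI tools, and it assumes "1 GB" means 1024^3 bytes.

```shell
# Hypothetical helper (not part of SCAMPI): suggest a transfer tool
# from the total transfer size in bytes.
# Assumption: "1 GB" is taken to mean 1024^3 bytes.
suggest_tool() {
    size_bytes=$1
    threshold=$((1024 * 1024 * 1024))
    if [ "$size_bytes" -gt "$threshold" ]; then
        echo "scampi"
    else
        echo "scp"
    fi
}
```

One way to use it is `suggest_tool $(( $(du -sk ~/mydir | cut -f1) * 1024 ))`, keeping in mind that du reports disk usage, which is only an estimate of the amount of data to be transferred.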

SCAMPI accelerates intersystem data movement by using multiple streams over multiple IPs across a LAN/WAN. Paired processes are established across the network to read-send-receive-write data, so the actual number of data streams is hosts*threads/2. Authentication is based on HPCMP Kerberos and, by default, data streams and metadata are encrypted.

Internal SCAMPI (ISCAMPI) is a system command line utility that runs inside batch jobs on a given system, with no external communication or authentication. Parallel nodes and threads accelerate the movement of data within an HPC system using native compilers/libraries and high-speed networks. The ISCAMPI command is typically used in-line in your batch job and usually runs on a subset of the job-allocated nodes. ISCAMPI processes both read and write data, so the number of data streams is hosts*threads.

Hybrid SCAMPI (HISCAMPI) is a system utility that runs across login nodes when moving files between your $CENTER or $ARCHIVE directories and an HPC system directory ($HOME, $WORKDIR, $PROJECT). HISCAMPI is closely related to ISCAMPI but contains special NFS file system handling methods to enable parallel access into NFS. Like SCAMPI, HISCAMPI runs on login nodes.
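The stream counts given above for SCAMPI (hosts*threads/2) and ISCAMPI (hosts*threads) can be checked with a little shell arithmetic. This is an illustrative sketch only; the function names are hypothetical, and the arguments correspond to the -nh and -pph values.

```shell
# Hypothetical helpers for estimating the number of data streams.

# scampi: processes are paired across the network (sender/receiver),
# so streams = hosts * threads / 2 (integer division).
scampi_streams() {
    echo $(( $1 * $2 / 2 ))
}

# iscampi: every process both reads and writes,
# so streams = hosts * threads.
iscampi_streams() {
    echo $(( $1 * $2 ))
}
```

For example, `scampi_streams 4 8` prints 16 and `iscampi_streams 4 6` prints 24.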

2. scampi

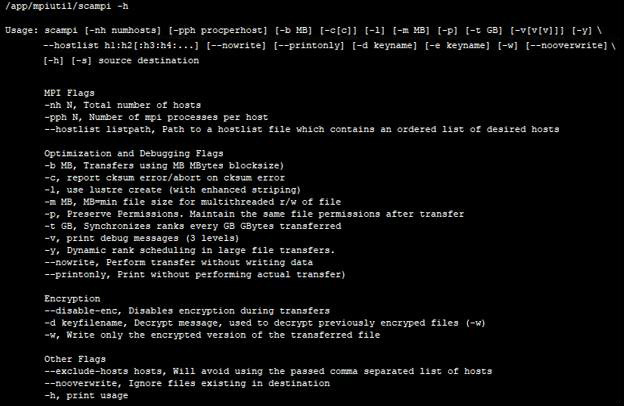

2.1. Syntax

To call the run script, /app/mpiutil/scampi, use -nh (num hosts) to specify the total number of hosts to use in your transfer, counting hosts on both the sending and receiving systems. Use the -pph (processes per host) flag to specify the number of processes/threads each host should run. The remaining syntax is modeled after the scp command: specify paths on the remote system as remotehost:path, and paths on the local system as the path alone.

2.2. Examples

The following examples show how to use scampi.

This example invokes a transfer from the home directory on the local system to the home directory on Centennial using 2 nodes with 5 threads each. There are a total of 2 nodes involved: one on the sending system and one on the receiving system. So in this example, there will be 1*5=5 streams of data.

/app/mpiutil/scampi -nh 2 -pph 5 ~/myfile centennial:~

The next example invokes a transfer from the home directory on the local system to the home directory on Onyx using 4 nodes with 8 threads each. There are a total of 4 nodes involved: two on the sending system and two on the receiving system. So in this example, there will be 2*8=16 streams of data.

/app/mpiutil/scampi -nh 4 -pph 8 ~/myfile onyx.erdc.hpc.mil:~

2.3. Tips

- The first time you use SCAMPI, you will likely need to manually approve additions to your ~/.ssh/known_hosts file (this allows ssh to suppress subsequent prompts from the target host system).

- Typically, using more than 8 threads per node shows diminishing returns on transfer times.

- Use the -y flag to enable the dynamic load balancing heuristic. This is faster in unstable environments, such as those with busy login nodes or WAN transports.

- Specify a value for the -m flag to decide which files to split before transferring in parallel. The following example will split any files larger than 512 MB.

/app/mpiutil/scampi -nh 2 -pph 4 -m 512 ~/mydir centennial:~

- Use the -l flag when destination is a Lustre file system to get parallel open and striping.

- It is not uncommon for scampi to fail during the initial setup of the communication because local or remote login nodes are down or busy (nodes are selected randomly). More than three consecutive startup failures, however, should be reported to the help desk.

- Use the -h flag for a list of all currently available options with a short description.
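Because startup failures are described above as not uncommon, a small retry wrapper can save some typing. The sketch below is hypothetical and not part of SCAMPI. It assumes that scampi exits with a non-zero status when it fails, which this guide does not confirm, and it retries on any failure, not only on startup failures.

```shell
# Hypothetical helper: run a command up to max_attempts times,
# stopping at the first success.
# Assumption: the command exits non-zero on failure.
retry() {
    max_attempts=$1
    shift
    attempt=1
    while [ "$attempt" -le "$max_attempts" ]; do
        if "$@"; then
            return 0
        fi
        echo "attempt $attempt of $max_attempts failed" >&2
        attempt=$((attempt + 1))
    done
    return 1
}
```

A possible invocation is `retry 3 /app/mpiutil/scampi -nh 2 -pph 5 ~/myfile centennial:~`. If all three attempts fail, that is the point at which the tips above suggest contacting the help desk.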

3. iscampi

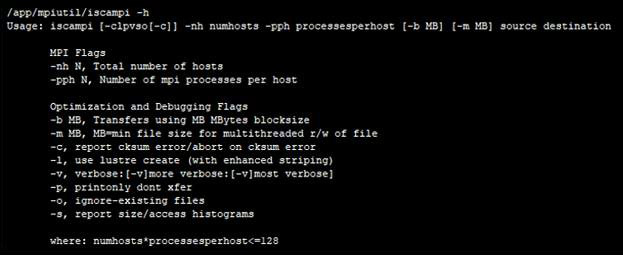

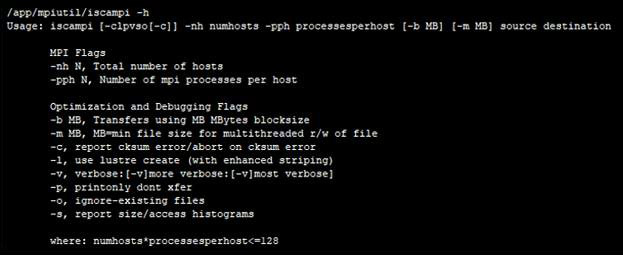

3.1. Syntax

Call the run script, iscampi. Use -nh (num hosts) to specify the total number of hosts to use in your transfer. Use the -pph (processes per host) flag to specify the number of processes/threads each host should run (nh*pph<=128). The remaining syntax is modeled after the scp command, except that no host information is allowed, because nodes are selected from the batch pool of your job. Always use the -l option when targeting Lustre file systems so that striping parameters are invoked. Other options include:

| Option | Description |
|---|---|
| -s | Histogram summary of file size/age |
| -b x | Use x MB block size for reading/writing |
| -m x | Use x MB file size to invoke gang reading/writing |
| -o | Don't write over existing files |
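The limit stated above (nh*pph<=128) can be checked before a job is submitted. The following is a minimal sketch; the function name `check_iscampi_procs` is hypothetical and not part of the SCAMPI tools.

```shell
# Hypothetical helper: verify that nh * pph does not exceed 128,
# the limit given in the iscampi syntax description.
check_iscampi_procs() {
    nh=$1
    pph=$2
    total=$((nh * pph))
    if [ "$total" -le 128 ]; then
        echo "ok: $total processes"
    else
        echo "too many: $total processes (limit 128)" >&2
        return 1
    fi
}
```

For instance, `check_iscampi_procs 4 6` reports 24 processes, while `check_iscampi_procs 17 8` fails because 136 exceeds the limit.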

3.2. Examples

Transfer from the home directory on the local system to $WORKDIR on the local system using 4 nodes with 6 threads each (4*6=24 threads), where $WORKDIR is a Lustre file system (-l), and provide a summary histogram of the files (-s: size/age histograms) using the shared version of iscampi:

/app/mpiutil/iscampi -l -s -nh 4 -pph 6 $HOME $WORKDIR

Transfer from $WORKDIR on local system to $HOME/results on the local system using 6 nodes (-nh 6), each with 6 threads (-pph 6), where $HOME is a Lustre file system (-l), using a block size of 16 MB (-b 16):

/app/mpiutil/iscampi -l -b 16 -nh 6 -pph 6 $WORKDIR $HOME/results

3.3. Tips

- Typically, using more than 8 threads per node shows diminishing returns on transfer times.

- Specify a value for the -m flag to select which files to transfer via parallel streams. The following example will split any files larger than 512 MB. Files below 512 MB will be moved in one stream.

/app/mpiutil/iscampi -nh 2 -pph 4 -m 512 ~/mydir $WORKDIR

- Use the -l flag when destination is a Lustre file system to get parallel open and striping.

- Use the -h flag for a list of all currently available options with a short description.

4. hiscampi

4.1. Syntax

See the iscampi syntax (Section 3.1).

4.2. Examples

Transfer from $CENTER on the local system to $WORKDIR on the local system using 4 nodes with 6 threads each (4*6=24 threads), where $WORKDIR is a Lustre file system (-l), and provide a summary histogram of the files (-s: size/age histograms) using the shared version of hiscampi:

/app/mpiutil/hiscampi -l -s -nh 4 -pph 6 $CENTER $WORKDIR

Transfer from $WORKDIR/results on the local system to $ARCHIVE/results using 6 nodes (-nh 6), each with 6 threads (-pph 6), using a block size of 16 MB (-b 16), and provide a summary histogram of the files (-s: size/age histograms):

/app/mpiutil/hiscampi -s -b 16 -nh 6 -pph 6 $WORKDIR/results $ARCHIVE/results